About ACAI Tutorials at ESSAI 2025

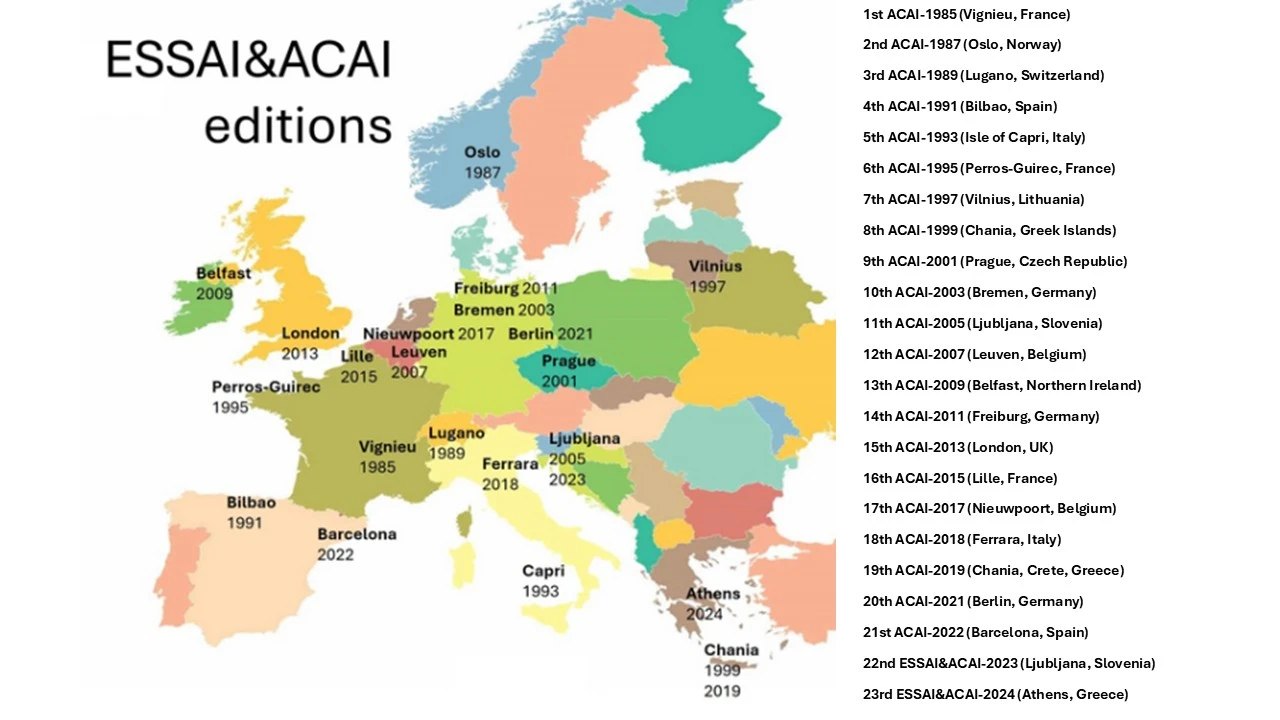

The Advanced Course on Artificial Intelligence (ACAI) is a long-standing initiative of the European Association for Artificial Intelligence (EURAI). Since 1985, ACAI has provided in-depth tutorials on current research topics in AI, typically delivered in focused sessions lasting 60 to 90 minutes. Over the years, ACAI has been hosted in various European locations and has contributed to the development of AI education and collaboration within the research community.

Since 2023, ACAI has been integrated into the European Summer School on Artificial Intelligence (ESSAI) as a dedicated track of topical tutorials. The 1st edition of ESSAI&ACAI was held in 2023 in Ljubljana, Slovenia, and was highly successful, attracting more than 500 participants.

- The event pictures are available on this page.

- The video lectures are available on this page.

In 2024, the 2nd edition of ESSAI&ACAI was held in Athens, Greece, and received excellent feedback from both student and lecturer participants. All ESSAI courses are available on the ESSAI-2024 website.

The 2025 edition, hosted in Bratislava, Slovakia, includes six ACAI tutorials prepared by the Local Organizing Committee. These sessions are intended to complement the broader summer school curriculum by addressing emerging areas of interest, methodological developments, and interdisciplinary approaches. The selected contributions reflect ongoing work in federated learning, quantum computing, biomedical AI, vision-language integration, and foundational language modelling.

ACAI Tutorials – ESSAI 2025

This tutorial introduces federated learning, including its core algorithm (FedAvg), privacy and security challenges (e.g., Byzantine workers), and recent advances in personalization and efficiency. It also gives an overview of the economic motivations and practical deployment considerations.

This tutorial covers strategies for learning with limited labeled data in medical imaging, including synthetic data generation and domain adaptation. It also introduces implicit neural representations as a tool for flexible image reconstruction.

This session examines the extent to which large language models (LLMs) learn and represent linguistic knowledge. It considers experimental evidence from training under extreme conditions and reflects on the implications for language understanding and task performance.

This session outlines key concepts in quantum computing and explores how machine learning methods are used within quantum workflows. It also introduces the field of quantum-assisted machine learning, focusing on current capabilities and open questions.

This tutorial reviews developments in vision-language models and self-supervised learning. It surveys architectures such as CLIP and Segment Anything, with attention to pretraining methods, embedding alignment, and open-vocabulary tasks.

This session presents applications of AI to the analysis of genomic data in cancer research. Methods covered include traditional machine learning, deep learning, and large language models for detecting mutations and identifying therapeutic targets.